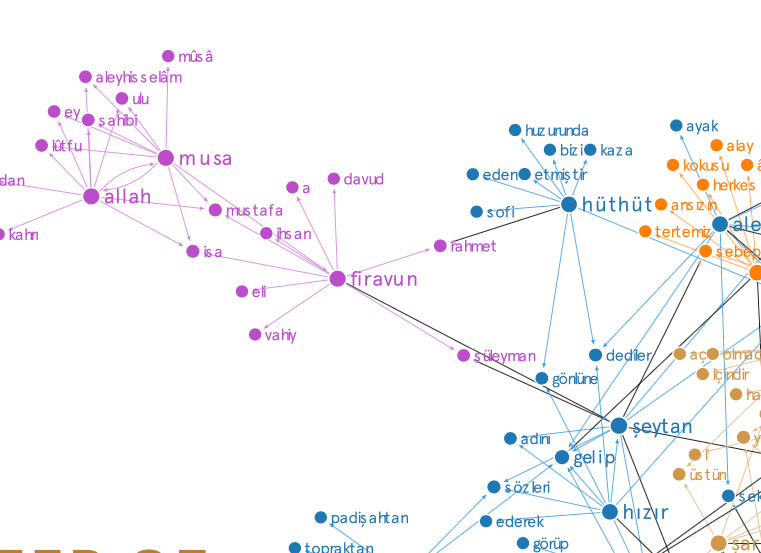

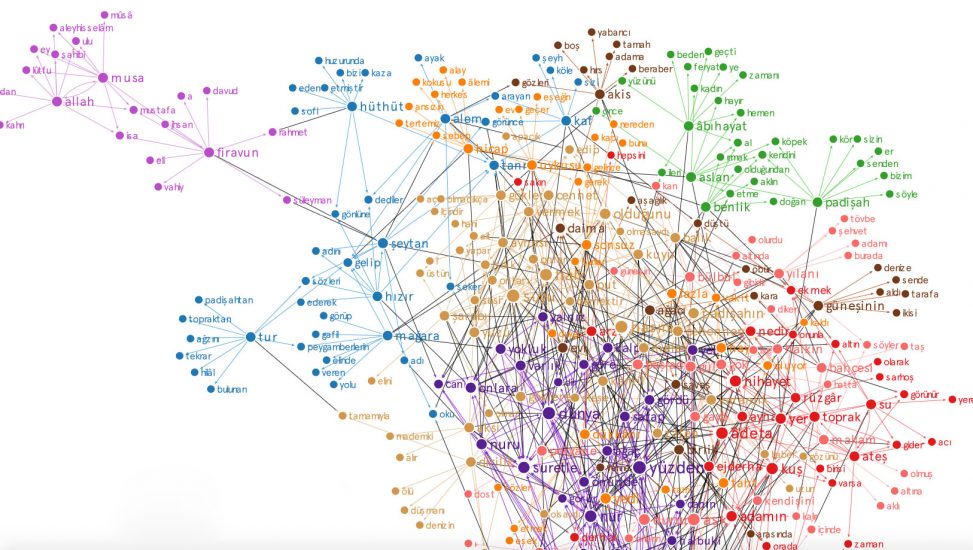

I started a symbol-map research project that applies computational methods to the text of Rūmī's Mesnevi. My goal was to discover the connections between archetypal symbols and present them as a map. When I analyzed a large map of all the symbols, clusters formed. Bridge symbols then emerged that linked these symbol sets to one another. We can call them symbols of symbols: the main pillars of the Mesnevi.

Process: The raw text of all six volumes of the Mesnevi was collected. It was then preprocessed to remove unnecessary letters, punctuation, numbers and stop words. Next, a lemmatization algorithm was applied. Word embeddings were built from this final version of the text, and cosine similarity between the embeddings was used to measure how similar two words are. To seed the network, I predetermined a list of 129 symbolic words. For each of them, the 10 most similar words were retrieved. All of these word pairs were then merged into one connected network, and a new file containing this network was created for the visualization phase. Social network analysis methods, such as betweenness centrality and degree centrality, were then applied. Betweenness centrality revealed several symbols in the raw text that behave like hubs.
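The similarity and merging steps can be sketched in plain Python. This is a minimal illustration, not the author's code. The `embeddings` dictionary holds hypothetical three-dimensional toy vectors standing in for real word vectors. The helpers `cosine`, `most_similar` and `build_network` are illustrative names. Trained-embedding libraries such as gensim offer an equivalent `most_similar` lookup. In the study, each of the 129 seed words contributed its 10 nearest neighbours. The toy vocabulary is too small for that, so the usage example calls `topn=3`.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def most_similar(word, embeddings, topn=10):
    """Return the `topn` (word, score) pairs closest to `word`, best first."""
    target = embeddings[word]
    scores = [(other, cosine(target, vec))
              for other, vec in embeddings.items() if other != word]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:topn]

def build_network(seeds, embeddings, topn=10):
    """Link each seed to its `topn` neighbours and merge every link into one
    undirected graph stored as {word: set_of_neighbours}."""
    graph = {}
    for seed in seeds:
        for neighbour, _score in most_similar(seed, embeddings, topn):
            graph.setdefault(seed, set()).add(neighbour)
            graph.setdefault(neighbour, set()).add(seed)
    return graph

# Hypothetical 3-dimensional toy vectors; real embeddings have far more dimensions.
embeddings = {
    "sea":   [0.9, 0.1, 0.0],
    "wave":  [0.8, 0.2, 0.1],
    "pearl": [0.7, 0.0, 0.3],
    "sky":   [0.1, 0.9, 0.1],
    "moon":  [0.2, 0.8, 0.2],
    "star":  [0.0, 0.9, 0.3],
}

graph = build_network(["sea", "sky"], embeddings, topn=3)
for word in sorted(graph):
    print(word, sorted(graph[word]))
```

Edges are stored in both directions. As a result, a neighbour shared by two seeds (here `wave` and `moon`) joins their clusters. This is how separate symbol groups can end up in one connected network.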

These are: Sky, Earth, Solomon, Sea, War, Moon, Mount Kaf, Face, Existence, God and Garden.
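Betweenness centrality scores a node by how many shortest paths between other nodes pass through it. That is why it surfaces bridge-like symbols. The following is a minimal pure-Python sketch of Brandes' algorithm for an unweighted, undirected graph, with degree centrality alongside for comparison. The graph is a hypothetical toy example, not data from the study, and the helper names are illustrative. In practice, a library such as networkx provides `betweenness_centrality` and `degree_centrality`. Its betweenness is normalised by default, whereas the scores here are raw counts.

```python
from collections import deque

def betweenness_centrality(graph):
    """Brandes' algorithm for an unweighted, undirected graph given as
    {node: set_of_neighbours}. Returns raw (unnormalised) scores in which
    each unordered pair of endpoints is counted once."""
    scores = {v: 0.0 for v in graph}
    for source in graph:
        # Breadth-first search from `source`: shortest-path counts and predecessors.
        stack, preds = [], {v: [] for v in graph}
        sigma = {v: 0 for v in graph}
        dist = {v: -1 for v in graph}
        sigma[source], dist[source] = 1, 0
        queue = deque([source])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies, farthest nodes first.
        delta = {v: 0.0 for v in graph}
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += (sigma[v] / sigma[w]) * (1 + delta[w])
            if w != source:
                scores[w] += delta[w]
    # Every undirected pair was counted once from each endpoint.
    return {v: s / 2 for v, s in scores.items()}

def degree_centrality(graph):
    """Degree divided by the maximum possible degree (n - 1)."""
    n = len(graph)
    return {v: len(nbrs) / (n - 1) for v, nbrs in graph.items()}

def from_edges(edges):
    """Build an undirected adjacency dict from (a, b) pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph

# Hypothetical toy graph: two tightly knit clusters joined only through "earth".
toy = from_edges([
    ("sea", "wave"), ("sea", "pearl"), ("wave", "pearl"),
    ("sky", "moon"), ("sky", "star"), ("moon", "star"),
    ("earth", "sea"), ("earth", "sky"),
])

betweenness = betweenness_centrality(toy)
degree = degree_centrality(toy)
for node in sorted(toy, key=betweenness.get, reverse=True):
    print(f"{node:6} betweenness={betweenness[node]:.1f} degree={degree[node]:.2f}")
```

In this toy graph, `earth` has a lower degree than `sea` or `sky` but the highest betweenness, because every path between the two clusters runs through it. Betweenness therefore picks out bridge nodes that degree alone would miss.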

Önal, Ö. (2023). Exploring the Potential of Archetypal Literary Social Networks (ALSN): A Preliminary Case Study of Mathnawi Archetype Network. Retrieved from osf.io/preprints/socarxiv/8z2rg

Lemmatization algorithm

This lemmatization algorithm uses a supervised deep learning approach implemented in Keras. I needed to preserve the semantic properties of the words. To make the lemmatizer model easier to train well, I therefore first developed a new lemmatization logic: a set of lexical decisions made according to the different lexical families of Turkish words and their meanings. Using this logic, I built a new training dataset of more than 10,000 labeled lemmatization samples, 10% of which were held out as test data. A sequence-to-sequence model based on Long Short-Term Memory (LSTM) networks was then trained on this dataset in Keras. Training for 200 epochs produced the best lemmatized roots: roots that still keep enough of their semantic meaning to be used in the word-embedding step that follows.
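The Keras model itself is not reproduced here. What follows is a minimal plain-Python sketch of the data preparation such a sequence-to-sequence lemmatizer typically needs, assuming a character-level model. It builds a character vocabulary, encodes words as fixed-length integer ids with start and end markers, and applies a 90/10 train/test split. It also shows the one-step shift used for teacher forcing. The word/lemma pairs are illustrative, not samples from the author's dataset, and the function names are hypothetical. The resulting id lists could then feed Keras layers such as `Embedding` and `LSTM` in an encoder-decoder model.

```python
import random

def build_vocab(pairs):
    """Assign an integer id to every character in the data.
    Id 0 is padding; ids 1 and 2 mark the start and end of a target sequence."""
    chars = sorted({c for word, lemma in pairs for c in word + lemma})
    symbols = ["<pad>", "<s>", "</s>"] + chars
    return {sym: i for i, sym in enumerate(symbols)}

def encode(text, vocab, max_len, add_markers=False):
    """Convert a string to a list of exactly `max_len` ids, right-padded with 0."""
    ids = [vocab[c] for c in text]
    if add_markers:
        ids = [vocab["<s>"]] + ids + [vocab["</s>"]]
    if len(ids) > max_len:
        raise ValueError(f"{text!r} needs more than {max_len} positions")
    return ids + [vocab["<pad>"]] * (max_len - len(ids))

def train_test_split(pairs, test_ratio=0.1, seed=0):
    """Shuffle reproducibly and hold out `test_ratio` of the pairs for testing."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_ratio))
    return shuffled[n_test:], shuffled[:n_test]

# Illustrative (inflected word, lemma) pairs; not taken from the original dataset.
pairs = [
    ("kitaplar", "kitap"), ("evler", "ev"), ("denizde", "deniz"),
    ("gönülden", "gönül"), ("aşkın", "aşk"), ("bahçeye", "bahçe"),
    ("ayın", "ay"), ("yüzünü", "yüz"), ("göklerde", "gök"), ("dağlar", "dağ"),
]

vocab = build_vocab(pairs)
train, test = train_test_split(pairs, test_ratio=0.1)
max_in = max(len(word) for word, _ in pairs)
max_out = max(len(lemma) for _, lemma in pairs) + 2  # room for <s> and </s>

encoder_inputs = [encode(word, vocab, max_in) for word, _ in train]
decoder_seqs = [encode(lemma, vocab, max_out, add_markers=True) for _, lemma in train]
# Teacher forcing: the decoder reads the target shifted right by one step
# and learns to predict the next character.
decoder_inputs = [seq[:-1] for seq in decoder_seqs]
decoder_targets = [seq[1:] for seq in decoder_seqs]
print(len(train), "training pairs,", len(test), "test pair(s),", len(vocab), "symbols")
```

Fixing the shuffle seed keeps the held-out 10% identical across runs. This matters when comparing models trained for different numbers of epochs.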
